History

Main article: History of computing hardware

Ancient and Medieval Devices

The ancient Greek-designed Antikythera mechanism, dated to between 150 and 100 BC, is generally regarded as the world's oldest known analog computer.

Many mechanical aids to calculation and measurement were constructed for astronomical and navigation use. The planisphere was a star chart invented by Abū Rayhān al-Bīrūnī in the early 11th century.[7] The astrolabe was invented in the Hellenistic world in either the 1st or 2nd centuries BC and is often attributed to Hipparchus. A combination of the planisphere and dioptra, the astrolabe was effectively an analog computer capable of working out several different kinds of problems in spherical astronomy. An astrolabe incorporating a mechanical calendar computer[8][9] and gear-wheels was invented by Abi Bakr of Isfahan, Persia in 1235.[10] Abū Rayhān al-Bīrūnī invented the first mechanical geared lunisolar calendar astrolabe,[11] an early fixed-wired knowledge processing machine[12] with a gear train and gear-wheels,[13] circa 1000 AD.

The sector, a calculating instrument used for solving problems in proportion, trigonometry, multiplication and division, and for various functions, such as squares and cube roots, was developed in the late 16th century and found application in gunnery, surveying and navigation.

The planimeter was a manual instrument to calculate the area of a closed figure by tracing over it with a mechanical linkage.

The slide rule was invented around 1620–1630, shortly after the publication of the concept of the logarithm. It is a hand-operated analog computer for doing multiplication and division. As slide rule development progressed, added scales provided reciprocals, squares and square roots, cubes and cube roots, as well as transcendental functions such as logarithms and exponentials, circular and hyperbolic trigonometry and other functions. Aviation is one of the few fields where slide rules are still in widespread use, particularly for solving time–distance problems in light aircraft.
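The link between the slide rule and the logarithm can be sketched in a few lines: sliding one logarithmic scale along another adds two lengths, and adding logarithms corresponds to multiplying the original numbers. The Python sketch below only illustrates that principle. The function name `slide_rule_multiply` and the rounding to three significant digits (a rough stand-in for the reading precision of a physical rule) are assumptions made for the example.

```python
import math

def slide_rule_multiply(a: float, b: float, digits: int = 3) -> float:
    """Multiply two positive numbers by adding their logarithms."""
    if a <= 0 or b <= 0:
        raise ValueError("slide rule scales only cover positive numbers")
    # Each scale position is proportional to log10 of the number it marks,
    # so placing the scales end to end adds the two logarithms.
    total_length = math.log10(a) + math.log10(b)
    product = 10 ** total_length
    # Round to a few significant digits (assumed reading precision).
    exponent = math.floor(math.log10(product))
    factor = 10 ** (digits - 1 - exponent)
    return round(product * factor) / factor

approx = slide_rule_multiply(12.3, 4.56)
print(approx)
```

Division works the same way with a subtraction in place of the addition.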

The tide-predicting machine invented by Sir William Thomson in 1872 was of great utility to navigation in shallow waters. It used a system of pulleys and wires to automatically calculate predicted tide levels for a set period at a particular location.

The differential analyzer, a mechanical analog computer designed to solve differential equations by integration, used wheel-and-disc mechanisms to perform the integration. In 1876 Lord Kelvin had already discussed the possible construction of such calculators, but he had been stymied by the limited output torque of the ball-and-disk integrators.[14] In a differential analyzer, the output of one integrator drove the input of the next integrator, or a graphing output. The torque amplifier was the advance that allowed these machines to work. Starting in the 1920s, Vannevar Bush and others developed mechanical differential analyzers.
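The chaining of integrators can be imitated numerically. The sketch below is a software analogy, not a model of any particular machine: it wires two hypothetical `Integrator` objects in a feedback loop to solve y'' = -y, with a small fixed time step standing in for the continuous rotation of the shafts. The class name, function name and step size are assumptions made for the example.

```python
import math

class Integrator:
    """Accumulates the integral of its input, like one wheel-and-disc unit."""

    def __init__(self, initial: float) -> None:
        self.output = initial

    def step(self, input_value: float, dt: float) -> float:
        self.output += input_value * dt
        return self.output

def solve_harmonic(t_end: float, dt: float = 1e-4) -> float:
    """Solve y'' = -y with y(0) = 0 and y'(0) = 1, returning y(t_end)."""
    velocity = Integrator(1.0)  # integrates y'' to give y'
    position = Integrator(0.0)  # integrates y' to give y
    for _ in range(round(t_end / dt)):
        # Feedback connection: y'' = -y drives the first integrator,
        # whose output in turn drives the second.
        v = velocity.step(-position.output, dt)
        position.step(v, dt)
    return position.output

y_quarter = solve_harmonic(math.pi / 2)
print(y_quarter)
```

Because the exact solution is sin(t), the printed value should be close to 1; the small remaining error comes from the finite step size.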

Programming language

Main article: Programming language

Programming languages provide various ways of specifying programs for computers to run. Unlike natural languages, programming languages are designed to permit no ambiguity and to be concise. They are purely written languages and are often difficult to read aloud. They are generally either translated into machine code by a compiler or an assembler before being run, or translated directly at run time by an interpreter. Sometimes programs are executed by a hybrid method of the two techniques.

Input/output (I/O)

Main article: Input/output

I/O devices are often complex computers in their own right, with their own CPU and memory. A graphics processing unit might contain fifty or more tiny computers that perform the calculations necessary to display 3D graphics.[citation needed] Modern desktop computers contain many smaller computers that assist the main CPU in performing I/O.

Fifth generation computer

From Wikipedia, the free encyclopedia

The Fifth Generation Computer Systems project (FGCS) was an initiative by Japan's Ministry of International Trade and Industry, begun in 1982, to create a computer using massively parallel computing/processing.

It was to be the result of a massive government/industry research project in Japan during the 1980s. It aimed to create an "epoch-making computer" with supercomputer-like performance and to provide a platform for future developments in artificial intelligence. There was also an unrelated Russian project named the fifth-generation computer (see Kronos (computer)).

In his "Trip report" paper[1] (which focused the FGCS project on concurrent logic programming as the software foundation for the project), Prof. Ehud Shapiro captured the rationale and motivations driving this huge project: "As part of Japan's effort to become a leader in the computer industry, the Institute for New Generation Computer Technology has launched a revolutionary ten-year plan for the development of large computer systems which will be applicable to knowledge information processing systems. These Fifth Generation computers will be built around the concepts of logic programming. In order to refute the accusation that Japan exploits knowledge from abroad without contributing any of its own, this project will stimulate original research and will make its results available to the international research community."

The term "fifth generation" was intended to convey the system as being a leap beyond existing machines. In the history of computing hardware, computers using vacuum tubes were called the first generation; transistors and diodes, the second; integrated circuits, the third; and those using microprocessors, the fourth. Whereas previous computer generations had focused on increasing the number of logic elements in a single CPU, the fifth generation, it was widely believed at the time, would instead turn to massive numbers of CPUs for added performance.

The project was to create the computer over a ten-year period, after which it was considered ended and investment in a new "sixth generation" project would begin. Opinions about its outcome are divided: either it was a failure, or it was ahead of its time.

Types of Computer

Computers come in all sorts of shapes and sizes. You are all familiar with desktop PCs and laptops, but did you know that computers can be as small as your mobile phone (in fact your phone is a computer!) and as large as a room?!

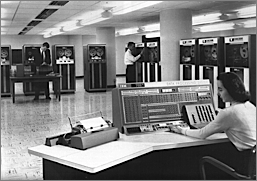

Mainframe Computer

A mainframe computer is a large computer, often used by large businesses, in government offices, or by universities.

Mainframe computers are typically:

- Powerful - they can process vast amounts of data, very quickly

- Large - they are often kept in special, air-conditioned rooms

- Multi-user - they allow several users (sometimes hundreds) to use the computer at the same time, connected via remote terminals (screens and keyboards)

From their invention back in the 1940s until the late 1960s, computers were large, very expensive machines that took up the whole of a room (sometimes several!). These were the only computers available.

The circuit-boards of these computers were attached to large, metal racks or frames. This gave them the nickname 'mainframe' computers.

Some of the most powerful mainframe computers can process so much data in such a short time that they are referred to as 'supercomputers'.

Personal Computer (PC)

ASCII

Not to be confused with MS Windows-1252 or other types of Extended ASCII.

ASCII codes represent text in computers, communications equipment, and other devices that use text. Most modern character-encoding schemes are based on ASCII, though they support many additional characters.

ASCII developed from telegraphic codes. Its first commercial use was as a 7-bit teleprinter code promoted by Bell data services. Work on the ASCII standard began on October 6, 1960, with the first meeting of the American Standards Association's (ASA) X3.2 subcommittee. The first edition of the standard was published during 1963,[3][4] a major revision during 1967,[5] and the most recent update during 1986.[6] Compared to earlier telegraph codes, the proposed Bell code and ASCII were both ordered for more convenient sorting (i.e., alphabetization) of lists, and added features for devices other than teleprinters.

ASCII includes definitions for 128 characters: 33 are non-printing control characters (many now obsolete)[7] that affect how text and space are processed,[8] and 95 are printable characters, including the space (which is considered an invisible graphic[9][10]:223).
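The split into 33 control characters and 95 printable characters can be checked with a short Python sketch. The code ranges used here (0 to 31 plus 127 for control characters, 32 to 126 for printable ones) come from the ASCII standard rather than from the text above, and the variable names are chosen only for this example.

```python
# Code points 0-127 make up the ASCII set.
all_codes = range(128)

# Control characters: codes 0-31 plus DEL (127).
control = [c for c in all_codes if c < 32 or c == 127]

# Printable characters: codes 32 (space) through 126 (tilde).
printable = [chr(c) for c in all_codes if 32 <= c <= 126]

print(len(control), len(printable))
print("".join(printable))
```

The first character in `printable` is the space, which is why it counts among the 95 even though nothing visible is drawn for it.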

The IANA prefers the name US-ASCII.[11] ASCII was the most common character encoding on the World Wide Web until December 2007, when it was surpassed by UTF-8, which includes ASCII as a subset.
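What "includes ASCII as a subset" means in practice can be seen by encoding the same text both ways. This is a small sketch using Python's built-in `str.encode`; the sample strings and variable names are arbitrary choices for the example.

```python
text = "Hello, ASCII!"

# Pure-ASCII text produces identical bytes under both encodings.
ascii_bytes = text.encode("ascii")
utf8_bytes = text.encode("utf-8")
same = ascii_bytes == utf8_bytes

# A character outside ASCII needs more than one byte in UTF-8
# and cannot be encoded as ASCII at all.
accented_utf8 = "é".encode("utf-8")
try:
    "é".encode("ascii")
    ascii_ok = True
except UnicodeEncodeError:
    ascii_ok = False

print(same, len(accented_utf8), ascii_ok)
```

One consequence is that any file containing only ASCII characters is already a valid UTF-8 file.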

Computer virus

Not to be confused with Worm (software) or Trojan Horse (computing).

Hex dump of the Blaster worm, showing a message left for Microsoft co-founder Bill Gates by the worm's programmer

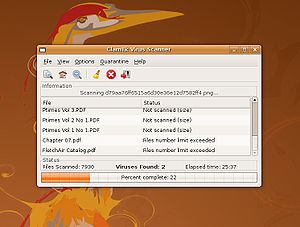

Virus writers use social engineering and exploit detailed knowledge of security vulnerabilities to gain access to their hosts' computing resources. The vast majority of viruses target systems running Microsoft Windows,[5][6][7] employing a variety of mechanisms to infect new hosts,[8] and often using complex anti-detection/stealth strategies to evade antivirus software.[9][10][11][12] Motives for creating viruses can include seeking profit, sending a political message, personal amusement, demonstrating that a vulnerability exists in software, sabotage and denial of service, or simply a wish to explore artificial life and evolutionary algorithms.[13]

Computer viruses currently cause billions of dollars' worth of economic damage each year[14] by causing system failures, wasting computer resources, corrupting data, and increasing maintenance costs, among other effects. In response, free, open-source antivirus tools have been developed, and a multi-billion dollar industry of antivirus software vendors has cropped up, selling virus protection to users of various operating systems, of which Android and Windows are among the most victimized.[citation needed] No currently existing antivirus software is able to catch all computer viruses (especially new ones); computer security researchers are actively searching for new ways to enable antivirus solutions to detect emerging viruses more effectively, before they become widely distributed.

Antivirus software

Antivirus software was originally developed to detect and remove computer viruses, hence the name. However, with the proliferation of other kinds of malware, antivirus software started to provide protection from other computer threats. In particular, modern antivirus software can protect against malicious Browser Helper Objects (BHOs), browser hijackers, ransomware, keyloggers, backdoors, rootkits, trojan horses, worms, malicious LSPs, dialers, fraudtools, adware and spyware.[1] Some products also include protection from other computer threats, such as infected and malicious URLs, spam, scam and phishing attacks, online identity (privacy), online banking attacks, social engineering techniques, Advanced Persistent Threat (APT), botnets and DDoS attacks.[2]

Block diagram

Block diagrams are typically used for higher level, less detailed descriptions that are intended to clarify overall concepts without concern for the details of implementation. Contrast this with the schematic diagrams and layout diagrams used in electrical engineering, which show the implementation details of electrical components and physical construction.

Computer data storage

160 GB SDLT tape cartridge, an example of off-line storage. When used within a robotic tape library, it is classified as tertiary storage instead.

The central processing unit (CPU) of a computer is what manipulates data by performing computations. In practice, almost all computers use a storage hierarchy, which puts fast but expensive and small storage options close to the CPU and slower but larger and cheaper options farther away. Often the fast, volatile technologies (which lose data when powered off) are referred to as "memory", while slower permanent technologies are referred to as "storage", but these terms are often used interchangeably. In the von Neumann architecture, the CPU consists of two main parts: the control unit and the arithmetic logic unit (ALU). The former controls the flow of data between the CPU and memory; the latter performs arithmetic and logical operations on data.
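The division of labour between the control unit and the ALU can be illustrated with a toy machine. The sketch below is a deliberately simplified model, not a description of any real processor: the tiny instruction set (`LOAD`, `STORE`, `HALT` and a few ALU operations), the single accumulator, and the function names `alu` and `run` are all assumptions made for the example. For brevity the program is kept in a separate list, whereas a von Neumann machine would hold instructions and data in the same memory.

```python
def alu(op: str, a: int, b: int) -> int:
    """Arithmetic logic unit: performs one arithmetic or logical operation."""
    if op == "ADD":
        return a + b
    if op == "SUB":
        return a - b
    if op == "AND":
        return a & b
    raise ValueError(f"unknown ALU operation: {op}")

def run(program: list[tuple], memory: list[int]) -> list[int]:
    """Control unit: fetches instructions and moves data between memory and the ALU."""
    accumulator = 0
    pc = 0  # program counter: index of the next instruction
    while True:
        op, *args = program[pc]
        pc += 1
        if op == "HALT":
            return memory
        if op == "LOAD":
            accumulator = memory[args[0]]
        elif op == "STORE":
            memory[args[0]] = accumulator
        else:
            # Anything else is handed to the ALU together with one memory operand.
            accumulator = alu(op, accumulator, memory[args[0]])

# Add the values in cells 0 and 1 and store the sum in cell 2.
program = [("LOAD", 0), ("ADD", 1), ("STORE", 2), ("HALT",)]
result = run(program, [2, 3, 0])
print(result)
```

Here `run` never does arithmetic itself; it only decides which data goes where, which mirrors the split between the two parts of the CPU.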